The Lean Privacy AI Playbook: An Essential Framework for Small Privacy Teams

A practical framework to help small teams run a lean, defensible, modern privacy program with automation and AI.

If you’re running a privacy program with little budget, no headcount, and no shortage of work to do… you’re not alone. Organized by Dwight Turner, “The Lean Privacy Playbook” became one of the most attended sessions at IAPP’s 2025 Privacy. Security. Risk. + AI Governance Global conference (“PSR”). The panel focused on strategies for small and mighty privacy teams to not only stay afloat, but excel, even with frozen budgets, evolving regulatory demands, and increasing risks.

Inspired by discussions at PSR, the Lean Privacy AI Playbook offers a practical and repeatable framework for running a defensible modern privacy program powered by automation and AI without sacrificing judgment or security.

Who needs the Lean Privacy AI Playbook?

Imagine that, as a team of one, you lead all of your company’s privacy efforts. You review new features shipped every week, vet vendors onboarded every day, and manage an inbox overflowing with data subject requests.

Much of your work feels like applying bandaids on a big wound, and you know more shortcuts won’t fix it. Sound familiar? Then this playbook is for you. Lean privacy isn’t about cutting corners. It’s about building a repeatable, accountable, and automated operating system that protects your company and gives you back time to focus on higher-impact work.

You’re facing three realities:

1. You own too much by default. Privacy is a hot potato. Engineering, Marketing, Product, and Security need to contribute to privacy, but unclear ownership means the work quietly lands back on you.

2. Regulators expect evidence, not effort. If a regulator, auditor, or litigator asks how a decision was made, “we did our best” isn’t enough. You need defensibility: policies, audit trails, version control, and consistent scoring frameworks.

3. Manual work introduces more risk than it removes. Spreadsheets, emails, and ad hoc reviews don’t scale, and they create inconsistencies that increase risk. The “do nothing” option is your biggest enemy.

You can’t wait to be miraculously granted a budget for your dream team before you start tackling these crucial tasks. It’s time to start filling gaps and making the most of your existing resources now.

This playbook breaks down simple strategies for prioritization, automation, and accountability so that you can stop drowning and start building towards your long-term vision.

1) Prioritize your privacy work

Most privacy tasks feel urgent, but only a subset actually protects the business from legal, financial, and reputational exposure. Lean teams anchor around one question: “If asked tomorrow, could we prove how and why we made this decision?”

Focus on your minimum viable defensibility. You need:

- Clear insight into the risks that actually expose the business.

- Audit trails for data subject requests (DSRs), vendor decisions, and AI evaluations to showcase oversight.

- Accountable collaborators across every team to share ownership of privacy.
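One way to make "could we prove how and why we made this decision?" concrete is a tamper-evident decision log. The following is a minimal sketch, not a prescribed implementation: the `DecisionRecord` fields, the workflow labels, and the hash-chaining approach are all illustrative assumptions. Each entry stores a hash of its own content plus the previous entry's hash, so a later edit to any earlier entry becomes detectable.

```python
import hashlib
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class DecisionRecord:
    """One privacy decision. Every field name here is illustrative."""
    workflow: str    # e.g. "dsr", "vendor_review", "ai_evaluation"
    subject: str     # ticket ID or vendor name, never raw personal data
    decision: str    # what was decided
    rationale: str   # why, in one or two sentences
    decided_by: str  # the accountable human reviewer
    decided_at: str  # ISO 8601 timestamp in UTC


def _entry_hash(body: dict, prev_hash: str) -> str:
    """Hash the record content together with the previous entry's hash."""
    payload = json.dumps(body, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_record(log: list[dict], record: DecisionRecord) -> dict:
    """Append a record, chaining it to the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else ""
    body = asdict(record)
    entry = {**body, "prev_hash": prev_hash, "hash": _entry_hash(body, prev_hash)}
    log.append(entry)
    return entry


def verify_log(log: list[dict]) -> bool:
    """Return True if no entry was altered or reordered.

    Entries dropped from the end of the log are not detected by this check.
    """
    prev_hash = ""
    for entry in log:
        body = {k: v for k, v in entry.items() if k not in ("hash", "prev_hash")}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _entry_hash(body, prev_hash):
            return False
        prev_hash = entry["hash"]
    return True
```

In practice the log would live in durable storage such as an append-only file or database table; an in-memory list is used here only to keep the sketch self-contained.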

Prioritize the workflows that materially reduce exposure: automated DSRs, accurate data maps, consent governance, and structured vendor/AI evaluation.

- If you’re concerned about DSR fulfillment, start by auditing the intake experience, the ID verification process, the completeness of the data returned to the data subject, and the security of the process.

- If you’re worried about consent governance and opt-outs, begin by making sure trackers stop firing after an opt-out, confirming that you honor global privacy control signals, and ensuring the placement of your Privacy Choices link is intuitive.

- If you’re focused on your public privacy policy, make sure data use is fully outlined and all service providers are documented.
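The opt-out checks in the list above can be spot-tested with a few lines of code. This sketch assumes that "global privacy controls" refers to the Global Privacy Control (GPC) signal, which browsers send as the `Sec-GPC: 1` request header. The `Tracker` type, the `essential` flag, and the rule "block everything non-essential after an opt-out" are simplifying assumptions; your actual legal obligations depend on jurisdiction.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tracker:
    name: str
    essential: bool  # hypothetical flag: True for strictly necessary scripts


def has_gpc_signal(headers: dict[str, str]) -> bool:
    """True when the request carries the GPC header `Sec-GPC: 1`."""
    normalized = {key.lower(): value.strip() for key, value in headers.items()}
    return normalized.get("sec-gpc") == "1"


def allowed_trackers(
    trackers: list[Tracker], headers: dict[str, str], stored_opt_out: bool
) -> list[Tracker]:
    """Trackers that may fire, given a stored opt-out or a GPC signal."""
    opted_out = stored_opt_out or has_gpc_signal(headers)
    return [t for t in trackers if t.essential or not opted_out]


def opt_out_violations(
    fired_names: list[str],
    trackers: list[Tracker],
    headers: dict[str, str],
    stored_opt_out: bool,
) -> list[str]:
    """Names of trackers that fired even though they were not allowed to."""
    allowed = {t.name for t in allowed_trackers(trackers, headers, stored_opt_out)}
    return sorted(set(fired_names) - allowed)
```

Feeding `opt_out_violations` the list of trackers observed in a browser session after an opt-out gives a quick pass/fail check: an empty list means nothing fired that should have been blocked.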

If you’re really not sure where to start, check out the 2025 Data Privacy Trends Report for insight on where most businesses are facing real privacy risks today.

2) Automate repetitive tasks

Realistically, most privacy work is repetitive, structured, and rules-based. Manual execution doesn’t make it safer; it makes it slower and riskier. These are the tasks you should allocate to AI and responsible automation, reserving human judgment for escalations and special cases.

Use AI as your always-on Privacy Analyst who drafts, summarizes, compares, and flags issues. You’ll remain the final reviewer.

Start with three common use cases: your privacy policy, your vendor contract reviews, and your AI risk assessments. Below we’ve included a link to each matching prompt.

Privacy Policy Risk Audit

Best-case scenario, you inherited a privacy policy created by outside counsel with limited context for the actual business. Reviewing the privacy policy yourself is a great way to get your heart rate up while you visualize an ever-increasing to-do list. Your company’s privacy policy is already public, so it’s a great low-risk document to have Gemini, Claude, or ChatGPT review for you.

Get the privacy policy risk audit prompt.

Vendor Contract Review

Joining the contract review process during vendor procurement is a typical first step for a new privacy team, and you can make a good impression on colleagues by completing reviews efficiently and in a structured way. Have AI take a first pass, using public documents to detect and triage the red flags regulators are looking for.

Get the vendor contract review prompt.

AI Risk Assessments

Just about every vendor is adding new AI capabilities to their product offering: how do you keep up and help your organization think critically about which partners to innovate with? AI regulation is evolving even faster than privacy regulation and requires an even deeper technical understanding to interpret effectively. You should make the final call, but you can use AI to help you compare an AI offering against shifting global AI governance regulations.

Get the AI risk assessment prompt.

As you start to deviate from these prompts and create your own, keep in mind:

- Avoid putting company or sensitive data into AI systems that won’t protect it. (Need help evaluating the safety of your AI tools? Review these tips on AI procurement.)

- Most LLMs are designed to give you what you want. Consequently, AI could under- or overestimate risk depending on how you write your prompt. As silly as it may feel, giving the AI model a clear role to play can produce more consistent results.

- Similarly, AI thrives on context. The more you can tell the AI model about your priorities, the better it can deliver on them. For instance, if you want ChatGPT to report relevant privacy news to you, tell it your company’s industry and any specific privacy regulations or litigation themes you’re monitoring to get better results.

- Regulators are very open to the use of AI to scale privacy work when it is paired with human judgment. Document both AI and human decisions to show that AI was used as a tool to support privacy, not as a deflection or evasion of privacy work.

- Anchor everything in your company’s responsible AI practices policy. If one doesn’t exist yet, that’s your opportunity to demonstrate your privacy program’s leadership. Refer to our guide on creating a responsible AI use policy.
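The tips above (a clear role, explicit context, and a documented human decision) can be captured in a small, reusable template. This is a sketch under stated assumptions: the `ReviewPrompt` and `ReviewDecision` names, their fields, and the instruction wording are hypothetical, and the code only builds the prompt text. It does not call any AI service.

```python
from dataclasses import dataclass


@dataclass
class ReviewPrompt:
    """A prompt with an explicit role and context (illustrative structure)."""
    role: str           # the role the model should play
    context: list[str]  # your priorities: industry, regulations, risk themes
    task: str           # what you want reviewed
    document: str       # public text only, per the first tip above

    def render(self) -> str:
        context_lines = "\n".join(f"- {item}" for item in self.context)
        return (
            f"Role: {self.role}\n\n"
            f"Context:\n{context_lines}\n\n"
            f"Task: {self.task}\n"
            "Rate each finding as low, medium, or high risk and quote the "
            "passage it relies on. If the document does not address a topic, "
            "say so instead of guessing.\n\n"
            f"Document:\n{self.document}"
        )


@dataclass
class ReviewDecision:
    """Pairs the AI output with the human call so both are on record."""
    prompt_text: str
    ai_output: str
    human_reviewer: str
    human_decision: str
    overrode_ai: bool  # True when the reviewer disagreed with the AI's rating
```

Asking for a fixed rating scale and quoted passages is one way to counter the tendency to under- or overestimate risk, since each rating can be checked against the text it cites. Storing the rendered prompt alongside the decision keeps the review reproducible.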

Of course, AI prompts aren’t the only way to automate privacy work. If you’re a DataGrail customer, make sure you take advantage of the automation and responsible AI built into your privacy platform:

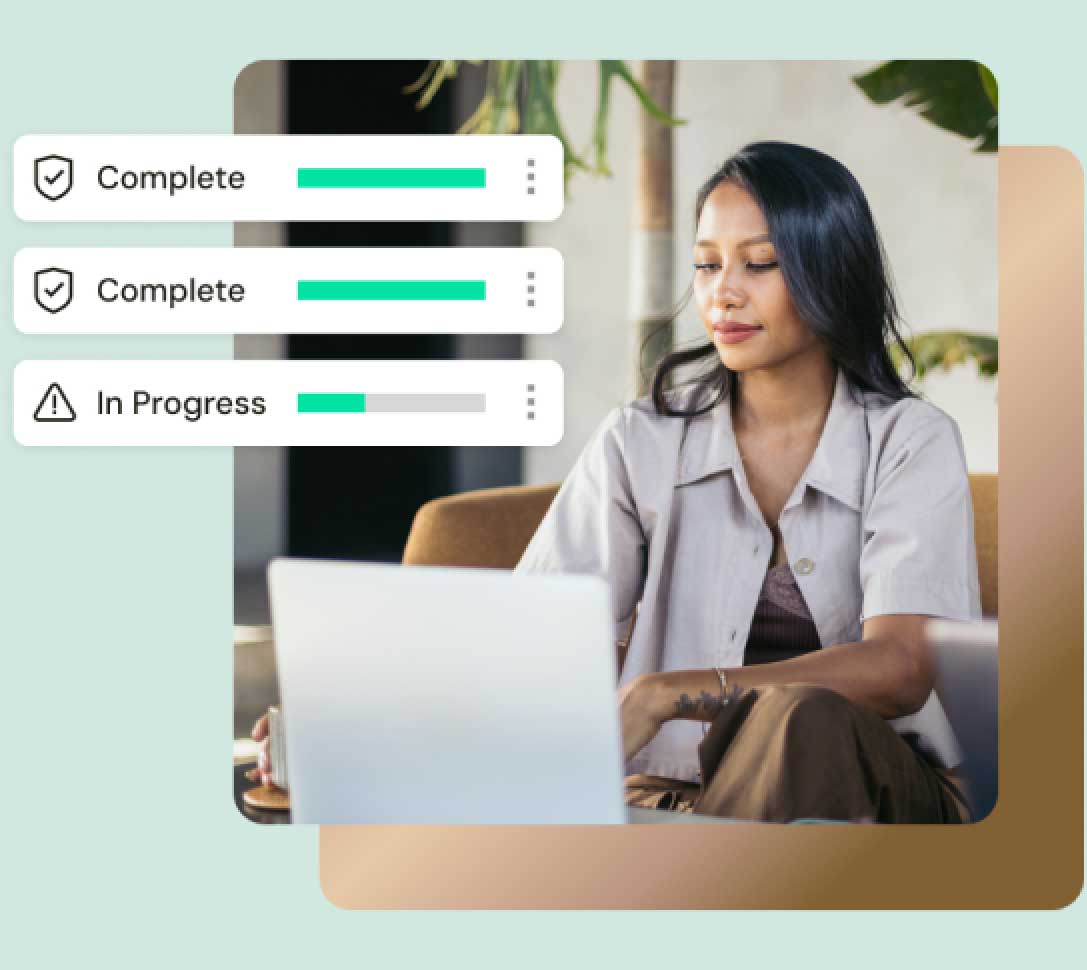

- DataGrail Request Manager: Data Subject Request (DSR) intake, routing and fulfillment, including through workflows customized to your system, data journey, and privacy policies

- DataGrail Consent: Continuous scanning, real-time regulation updates, and AI-recommended cookie rules

- DataGrail Live Data Map: Patented system detection; no-scan, AI-powered instant risk insights; AI-suggested processing activities and pre-filled Record of Processing Activities (RoPA) and Data Protection Impact Assessment (DPIA/PIA) fields; and structured and unstructured data discovery and classification

3) Establish cross-department accountability

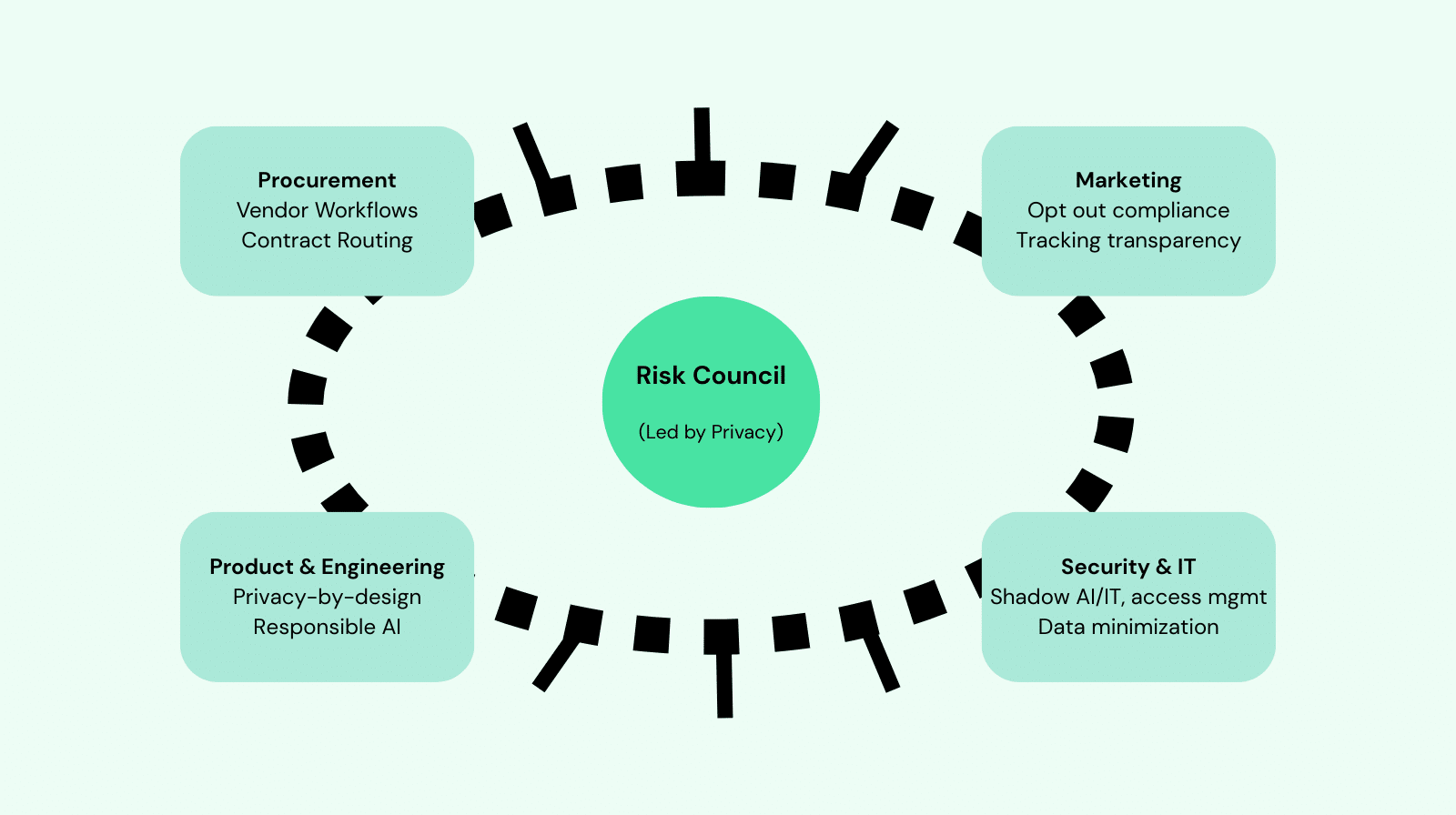

Privacy collapses when ownership is unclear. But you can’t own everything. To stay lean, you need distributed responsibility with clear, assigned, documented owners.

The best lean privacy teams invest time creating champions across the business through cross-functional risk councils and similar groups. However you structure it, consider assigning accountability by function:

- Engineering and Product are responsible for privacy-by-design and AI model documentation

- Security and IT are responsible for access management, data minimization, and shadow IT remediation

- Marketing is responsible for consent governance, cookie compliance, and tracking transparency

- Procurement is responsible for vendor evaluation workflows and contract routing

At the center of this spiderweb, you’ll monitor the system and ensure it is working. You need dashboards, routing rules, and SLAs to avoid bottlenecks and over-reliance on you.
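Routing rules and SLAs can start as a simple lookup table before you invest in tooling. The sketch below maps the functions listed above to owners. The task-type keys, the SLA day counts, and the fallback to the privacy team are illustrative assumptions, not recommended values.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# task type -> (accountable owner, SLA in calendar days); day counts are made up
ROUTING_RULES: dict[str, tuple[str, int]] = {
    "privacy_by_design": ("Engineering and Product", 10),
    "ai_model_documentation": ("Engineering and Product", 10),
    "access_management": ("Security and IT", 5),
    "shadow_it_remediation": ("Security and IT", 14),
    "cookie_compliance": ("Marketing", 7),
    "vendor_evaluation": ("Procurement", 10),
}
DEFAULT_RULE = ("Privacy", 5)  # unmapped work falls back to the privacy team


@dataclass
class Ticket:
    ticket_id: str
    task_type: str
    opened_on: date
    closed: bool = False


def route(ticket: Ticket) -> tuple[str, int]:
    """Return the (owner, SLA days) pair for a ticket."""
    return ROUTING_RULES.get(ticket.task_type, DEFAULT_RULE)


def overdue_by_owner(tickets: list[Ticket], today: date) -> dict[str, list[str]]:
    """Group open tickets that are past their SLA by accountable owner."""
    overdue: dict[str, list[str]] = {}
    for ticket in tickets:
        owner, sla_days = route(ticket)
        if not ticket.closed and today > ticket.opened_on + timedelta(days=sla_days):
            overdue.setdefault(owner, []).append(ticket.ticket_id)
    return overdue
```

The output of `overdue_by_owner` is the kind of summary a dashboard would show. Watching how often work lands on the fallback owner is also a cheap signal that a routing rule is missing.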

4) Tap into the privacy community

Don’t forget: you’re never alone in this. Thousands of other privacy practitioners are in exactly your shoes. If you can’t make it to PSR to find them, join us on Privacy Basecamp. Our Slack community offers breaking news and best practice discussions for privacy teams of every size and shape. Be sure to join the #ai-labs channel for insights from fellow privacy professionals on their own AI privacy experiments.

You don’t need a big team to run a strong privacy program. You need prioritization, automation, and accountability. Once you’ve built the start of each, you’ll be ready to grow and expand your privacy program with time. Show some results, and you’ll be ready to pitch a bigger budget to your CFO in no time.