AI is accelerating—without a clear owner for governance.

As artificial intelligence becomes central to business operations, managing its risks, ethics, and oversight becomes more urgent. But here’s the problem: there’s no clear owner of AI governance. Privacy, legal, security, and other teams all touch it, yet none is explicitly accountable for it.

1. Privacy, Legal, and Security All Claim It

AI impacts sensitive data, compliance, intellectual property, and risk—so naturally, multiple departments feel responsible.

- Privacy teams are worried about how AI handles personal data, especially under regulations like GDPR and CPRA, and with a U.S. federal privacy framework still under discussion.

- Legal teams are focused on liability, copyright (hello, AI-generated content), and contractual language surrounding AI use.

- Security teams worry about model exfiltration, hallucinated vulnerabilities, and prompt injection attacks—valid concerns with real-world implications.


But overlapping ownership can lead to blurred lines, missed handoffs, and conflicting decisions. And worse—it can stall meaningful governance altogether.

2. No One Is Designed to Own It Yet

Here’s the catch: no role today is purpose-built to own AI governance. Even the Chief AI Officer, where the role exists, may be focused more on enablement than control.

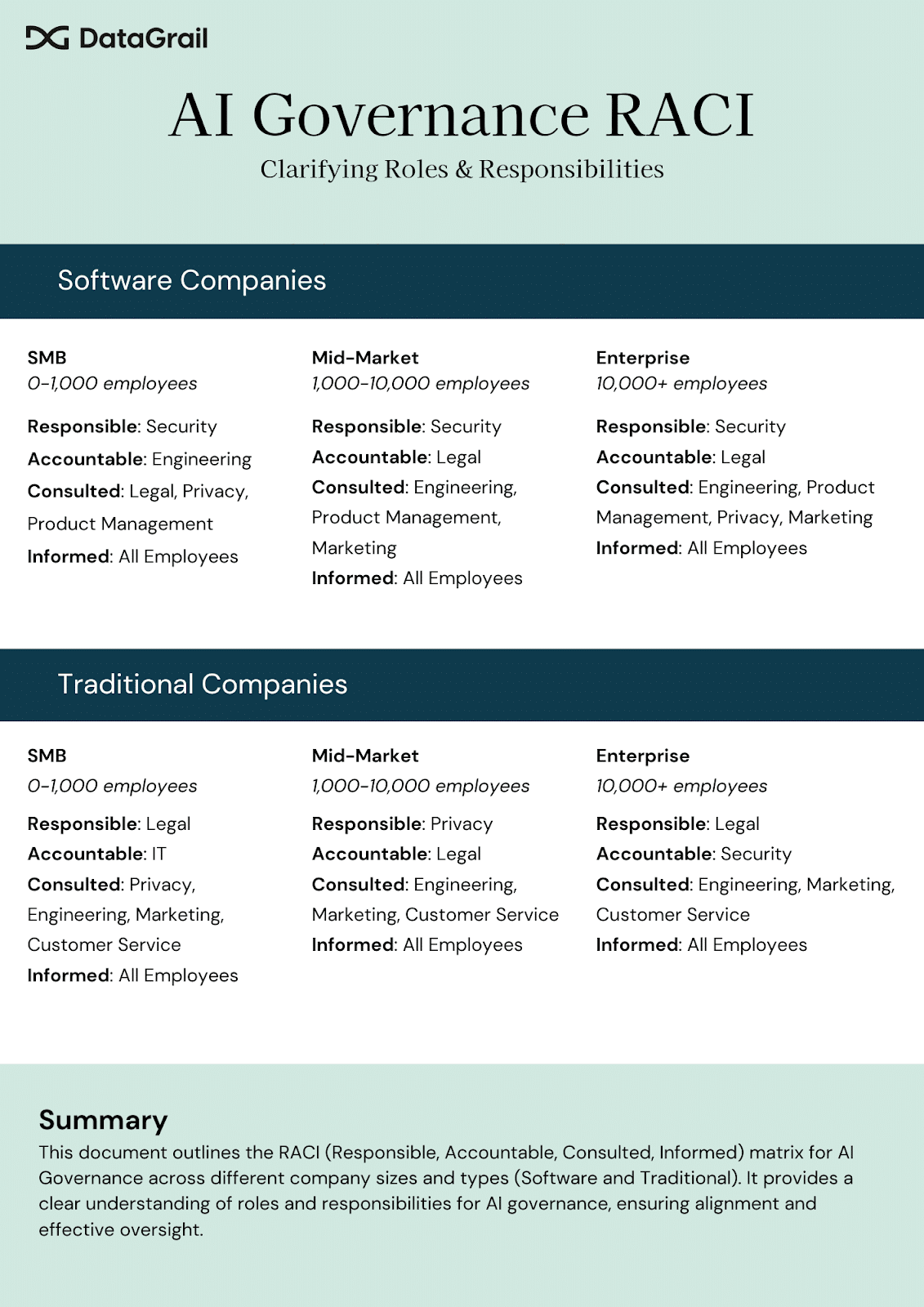

AI governance often falls into a “shared responsibility” model. That sounds collaborative—but it often translates to a RACI matrix with too many “informed” and not enough “accountable.”

What does a typical RACI look like?
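There is no single standard layout, but a minimal Python sketch can illustrate the failure mode described above. The activities, team names, and assignments here are hypothetical and exist only for illustration. The check flags any activity that does not have exactly one “accountable” owner.

```python
from collections import Counter

# Hypothetical RACI assignments for illustration only; the activities and
# team names are assumptions, not drawn from any real organization.
# R = Responsible, A = Accountable, C = Consulted, I = Informed
RACI = {
    "Approve new AI use case": {
        "Privacy": "C", "Legal": "C", "Security": "C", "Product": "R", "Executives": "I",
    },
    "Review training data": {
        "Privacy": "A", "Legal": "C", "Security": "I", "Product": "R", "Executives": "I",
    },
    "Monitor model drift": {
        "Privacy": "I", "Legal": "I", "Security": "I", "Product": "R", "Executives": "I",
    },
    "Respond to AI incident": {
        "Privacy": "A", "Legal": "A", "Security": "R", "Product": "C", "Executives": "I",
    },
}


def find_accountability_gaps(raci):
    """Return activities that do not have exactly one Accountable owner."""
    gaps = {}
    for activity, roles in raci.items():
        counts = Counter(roles.values())
        if counts["A"] == 0:
            gaps[activity] = "no accountable owner"
        elif counts["A"] > 1:
            gaps[activity] = "multiple accountable owners"
    return gaps


gaps = find_accountability_gaps(RACI)
for activity, problem in gaps.items():
    print(f"{activity}: {problem}")
```

In this made-up matrix, only the training data review has a single accountable team. The other rows show the two patterns to watch for: plenty of teams consulted or informed with nobody accountable, or accountability split between teams.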

3. Today, It’s Often Under Privacy—But That May Change

In the absence of clear ownership, privacy teams are often stepping in to fill the void. They’re already versed in compliance, risk, and data governance—and AI naturally overlaps with all three.

But privacy teams aren’t always equipped to govern things like:

- Model explainability and bias detection

- Training data lineage and technical accuracy

- Open-source model licensing risks

- Ongoing monitoring for hallucinations or drift

And coverage varies by company size and industry:

- Tech-forward organizations (especially in SaaS or data-driven industries) may have robust data governance or AI risk teams already in place.

- Traditional or smaller enterprises, especially those with fewer than 10,000 employees, may not have formal AI oversight at all, relying instead on privacy or IT to “figure it out.”

As AI matures, we may see new roles emerge: AI Risk Officers, Algorithmic Impact Auditors, or even dedicated AI Governance Committees.

The Bottom Line: Governance Can’t Be an Afterthought

AI is moving fast. Governance needs to catch up. Whether you’re deploying LLMs internally or embedding AI into your product, someone needs to own the risk conversation. That might be privacy today—but the future will likely demand a more specialized, cross-functional solution.

Why is AI governance important?

AI governance ensures that artificial intelligence systems are used responsibly, ethically, and in compliance with regulations. Without governance, organizations risk exposing themselves to legal liabilities, reputational harm, and security vulnerabilities.

Who should be responsible for AI governance today?

Right now, no single role universally owns AI governance. In many companies, privacy, legal, or security teams step in—but each has limitations. Ultimately, AI governance often requires a cross-functional approach until dedicated roles or committees emerge.

How does AI governance differ from data governance?

While data governance focuses on the quality, security, and compliance of data, AI governance extends further. It addresses issues like model transparency, algorithmic bias, responsible AI use, and the ethical implications of automated decision-making.

What are the biggest risks of not having AI governance?

The risks include regulatory penalties for misuse of personal data, biased outcomes that impact customers or employees, intellectual property disputes over AI-generated content, and security vulnerabilities from malicious model manipulation.

How can organizations start building an AI governance framework?

Companies can begin by:

- Mapping AI use cases and associated risks

- Defining accountability through a clear RACI or committee

- Integrating AI considerations into existing privacy, security, and compliance programs

- Establishing ongoing monitoring for bias, drift, and misuse

- Preparing for evolving regulations and industry standards
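The first two steps can start as something as simple as a shared inventory. The sketch below is one possible shape for that inventory in Python, not a prescribed framework: the field names, the example use cases, and the scoring rule are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AIUseCase:
    """One row in a hypothetical AI use-case inventory."""
    name: str
    accountable_owner: Optional[str]  # None means nobody is accountable yet
    uses_personal_data: bool
    customer_facing: bool
    monitored: bool  # ongoing checks for bias, drift, or misuse


def risk_tier(use_case: AIUseCase) -> str:
    """Illustrative scoring rule (an assumption, not an industry standard)."""
    score = (
        int(use_case.uses_personal_data)
        + int(use_case.customer_facing)
        + int(not use_case.monitored)
    )
    if score >= 2:
        return "high"
    if score == 1:
        return "medium"
    return "low"


def open_actions(use_case: AIUseCase) -> List[str]:
    """List the governance gaps that still need an owner or a process."""
    actions = []
    if use_case.accountable_owner is None:
        actions.append("assign an accountable owner")
    if not use_case.monitored:
        actions.append("set up ongoing monitoring")
    return actions


# Hypothetical inventory entries for illustration only.
inventory = [
    AIUseCase("Internal LLM assistant", None, False, False, False),
    AIUseCase("Support chatbot", "Privacy", True, True, True),
    AIUseCase("Resume screening model", None, True, False, False),
]

for uc in inventory:
    todo = "; ".join(open_actions(uc)) or "none"
    print(f"{uc.name}: tier={risk_tier(uc)}, open actions: {todo}")
```

Even a rough inventory like this makes it visible which use cases have no accountable owner and no monitoring, which is usually the first conversation a governance committee needs to have.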
