AI & Data Privacy

Snapshot of the History of AI & the Regulatory Landscape

Given generative AI’s transformative power, companies are scrambling to figure out how it can best be used to further their businesses. There is a fervor around it unlike anything we’ve seen since, perhaps, the debut of the iPhone. At the same time, consumer advocates and technologists are sounding the alarm about privacy and security issues. People, governments and institutions are taking action.

In late 2023, EU lawmakers agreed on the core elements of the EU AI Act, which is expected to pass in 2024. It will require foundational AI models to comply with transparency obligations, and it will ban several uses of AI, including the bulk scraping of facial images. It will also require businesses using “high-risk” AI to assess and report their systemic risks. The Act’s transparency requirements include informing users when they are interacting with AI.

Higher-risk use cases, those that could potentially harm health, safety, fundamental rights, the environment, democracy, or the rule of law, carry stricter obligations. These include mandatory assessments, data governance requirements, registration in an EU database, risk management and quality management systems, and requirements for transparency, human oversight, accuracy, robustness, and cybersecurity.

In the United States, we’re also seeing some action. The California Privacy Protection Agency (CPPA), the state’s enforcement agency, recently released its draft regulatory framework for “automated decision-making technology” (its term for AI). The framework would give California residents the right to know in advance when automated decision-making tools are used and to opt out of their data being used in AI models. Beyond California, a number of state privacy laws have rules against profiling people for behavioral advertising purposes: analyzing and predicting personal preferences, behaviors, and attitudes. Some states also require businesses to let consumers opt out of automated decision-making.

In June 2023, members of the U.S. Congress introduced legislation aimed at regulating the government’s use of AI and monitoring U.S. competitiveness in AI relative to other countries. More recently, an executive order was signed that prohibits or restricts U.S. investments in Chinese AI systems.

Timeline of Action Against AI

- 21 April 2021: EU AI Act proposed.

- January 2023: NIST releases the AI RMF (Risk Management Framework).

- March 2023: Italy briefly bans ChatGPT until OpenAI addresses the country’s concerns and puts measures in place to show that EU citizens’ data privacy rights are being observed.

- April 2023: Spain and Canada launch probes over similar concerns, and other countries consider similar precautionary actions.

- April 2023: FTC Chair Khan and officials from the DOJ, CFPB, and EEOC release a joint statement saying they will enforce existing laws regardless of the technology involved, including AI.

- June 2023: First bills to regulate generative AI introduced in Congress.

- July 2023: Sarah Silverman sues OpenAI over copyright infringement.

- October 2023: President Biden issues AI Executive Order.

- December 2023: EU lawmakers agree on core AI Act regulations.

- December 2023: NYT files lawsuit against OpenAI.

- Early 2024: EU AI Act expected to be adopted.

Generative AI Risks & Considerations for Adopting AI in the Enterprise

AI Risks to Business, People & Society

AI has many benefits, yet a lot can go wrong if we don’t look ahead and establish guardrails for using AI responsibly. Data is at the heart of the issue, for both good and bad: data feeds the models, and without it, the models would be worthless. The biggest question we need to ask ourselves is: How do we responsibly and ethically collect and use data in our AI models without introducing risk?

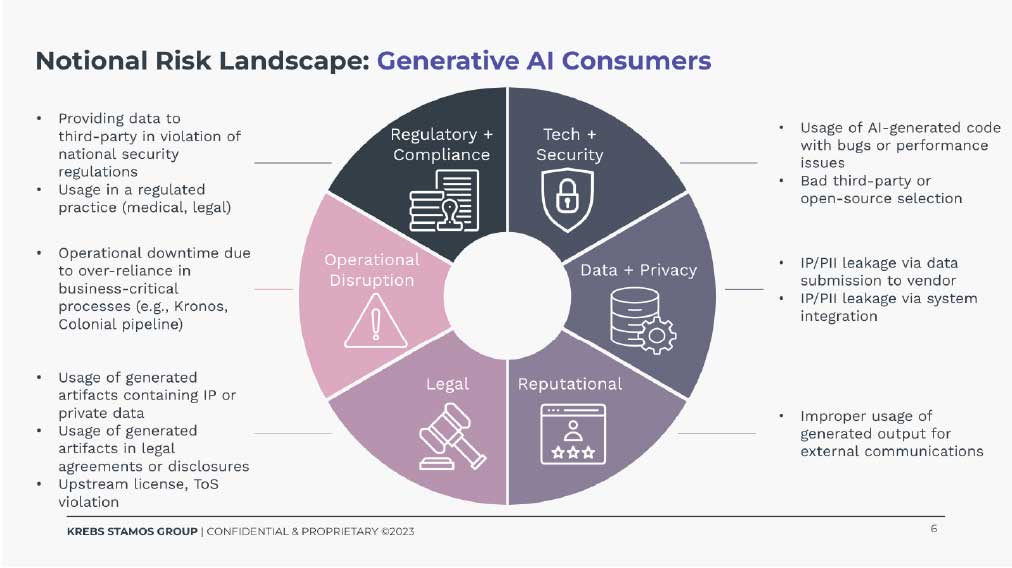

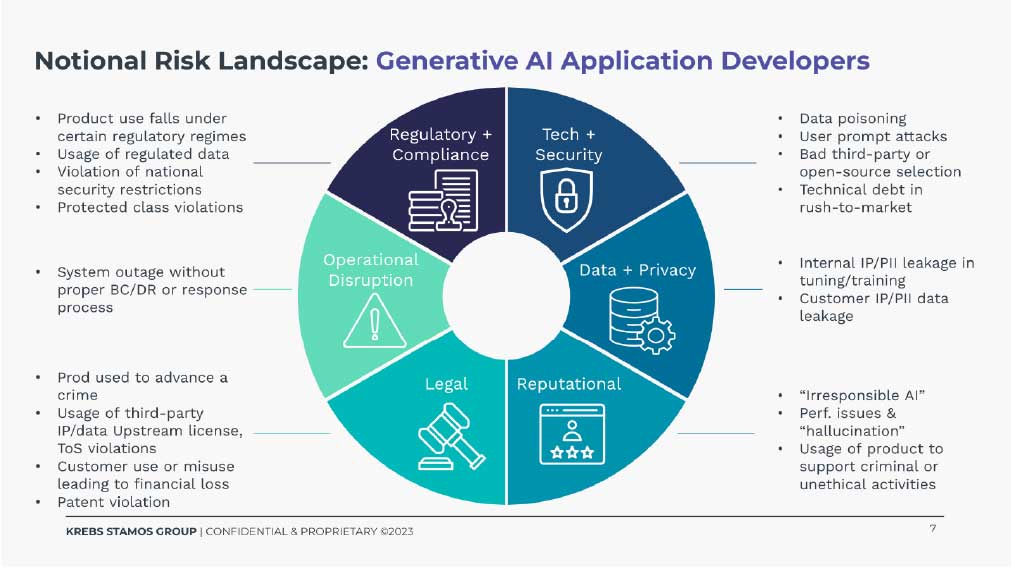

Businesses need to think about how they responsibly, legally and ethically use AI, and understand risks so they can address them. The risks are numerous, but can be categorized into four key buckets:

In late 2023, DataGrail welcomed Alex Stamos, one of the leading thinkers on cybersecurity and generative AI, to keynote our inaugural DataGrail Summit. We’ve included slides he shared on how he organizes AI risks. He buckets the risks into two categories: the risks of people (that is, employees) using generative AI, and the risks of application developers using generative AI in the products they build. (Access Alex Stamos's full keynote here.)

Of course, there are larger threats to society as well. There are also risks to individuals, including exclusion and bias in the form of racism, sexism, ageism, discrimination against veterans, and more. Businesses must also consider their role when misinformation proliferates: as we’ve seen, it can change human thoughts and behaviors at scale.

To minimize the risks associated with adopting AI, we recommend that businesses follow this five-step approach. It starts with forming a taskforce and documenting your principles for the ethical use of AI, then moves on to discovering and monitoring AI usage within your business so you can put controls in place to actively manage it.

Step 1: Create an AI Taskforce

While AI risks have a technical dimension, they affect everyone and aren’t purely technical. Companies that want to be thoughtful about this create a taskforce of executives to oversee and understand the company’s use of AI. Your stakeholders should include your General Counsel, CISO, CIO, and product development leaders. Regular communication from this taskforce to the broader executive team will be key.

Security teams will likely own operationalizing the management and monitoring of AI use, but they need support from others, as the risks go far beyond information security and outside the purview of the CISO. AI committees should consider seeking technical partners who can help identify AI risk within your organization, vet third-party vendors’ AI use, and operationalize the AI risk assessments that will be core to complying with future regulations.

Step 2: Build Your AI Ethics Principles and Policy

AI regulations are in flux and taking shape now, so it’s hard to know concretely what the law requires. In the meantime, you can chart your company’s path forward based on ethical principles that encourage the responsible use of AI. The first step to determining how to govern AI usage is to look inward at your company’s own ethics and build a set of guiding principles: know your why behind AI. Google has done an excellent job of outlining why and how it uses AI, and Salesforce has an excellent overview of its AI principles. Google regularly updates its principles as it learns more about AI and its potential applications, and that’s okay. No one is going to get their policy exactly right on the first go-round; being nimble and updating it regularly should be the norm.

Know Your Why Behind AI

The first step to developing your principles is knowing why you want to adopt generative AI. Ask yourself, what are the outcomes you hope to achieve, and are they worth the potential risks?

Common answers could be to:

- increase productivity

- improve and personalize experiences

- enhance creativity

- reduce costs & boost scalability

- gain a competitive advantage

As you understand what you want to achieve with AI, values come into play. What are your corporate values, and how can they be applied to responsible use of generative AI? For any AI ethical use policy to be effective, it has to be meaningful and work within the scope and culture of your organization. DataGrail developed a playbook that guides you through the steps to take to build your AI Use Principles and Policy. Included is our own policy and the steps we took to create our own principles.

Step 3: Discover Where You’re Using AI Across Your Business

Let’s not forget that AI has been around for a while now. You’re probably familiar with traditional AI, which makes predictions, such as the search and recommendation engines behind Google, YouTube, Amazon, and Netflix. Newer on the scene is generative AI, which creates new content based on learned patterns.

You have probably already adopted a fair amount of AI in your business, whether your R&D or product teams are building it into your products or you are using third-party software that has already incorporated AI.

Four Types of AI Use Cases in a Business That Lead to Risk

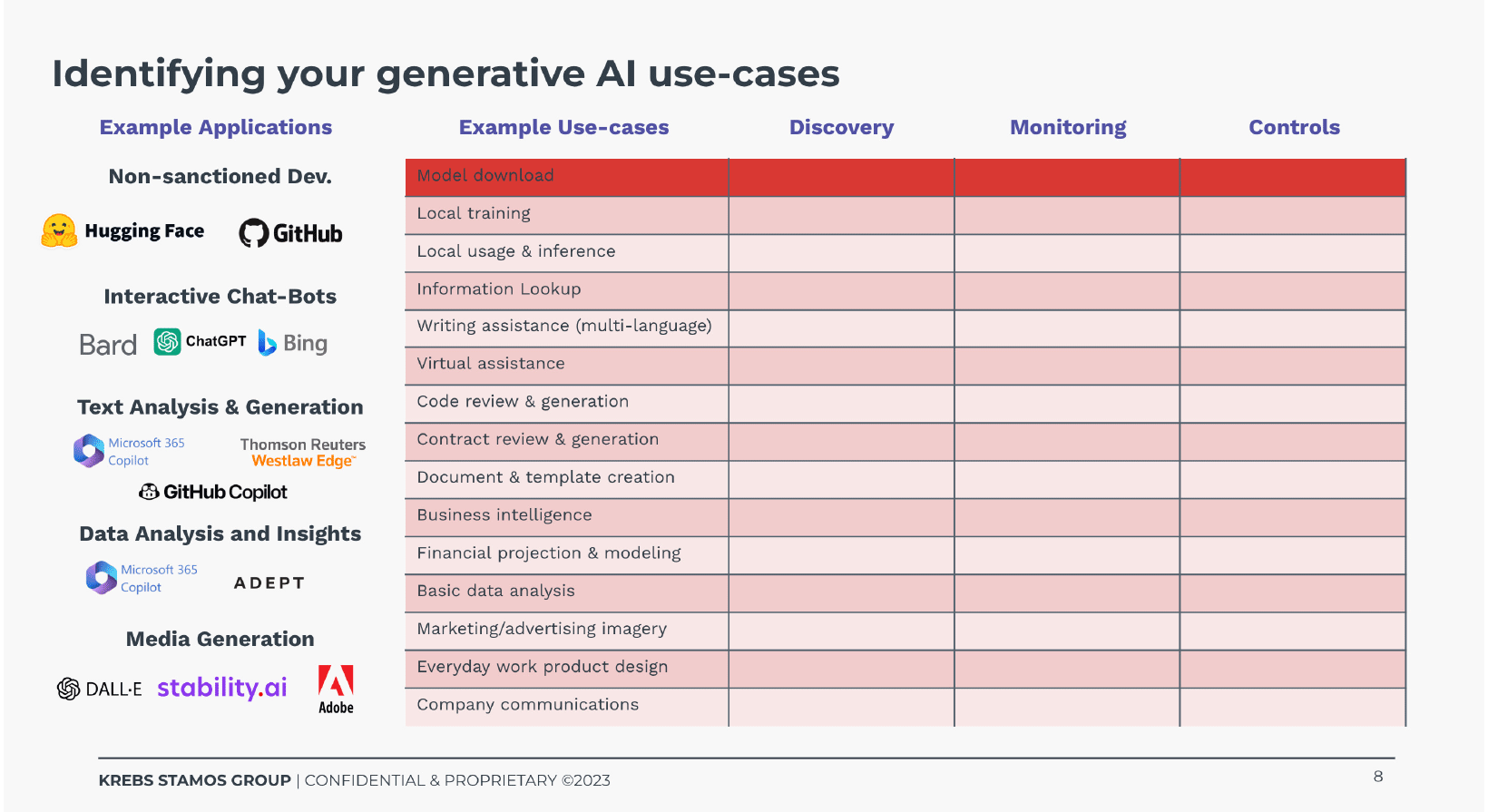

Take a moment to audit and discover where AI might be in your business, and where you may have AI risks.

AI in the Wild

Publicly available AI tools (generative or otherwise) that employees may be using for work-related purposes, potentially with data sourced at work. This includes ChatGPT, Midjourney, Bard, etc., and one of the risks is personal data, IP, or trade secrets being uploaded into the model without authorization. This class of AI use requires a Responsible Use Policy, which needs to be socialized broadly so employees know the policy and how to apply it.

AI in Your Supply Chain, aka Embedded AI

Many SaaS solutions build generative AI into their systems, and it filters its way into your business (see Figure XX, a visual created by Alex Stamos and presented at DataGrail Summit). This is one of the biggest challenges businesses will face when trying to identify and monitor AI usage, and it has been happening for years with little or no governance as part of third-party risk management. In this scenario, you adopt a SaaS solution to increase productivity in some area of your business, but you’re unsure how the vendor’s AI usage will affect your business.

Hybrid AI

This class of AI use is potentially the riskiest, depending on the data going into the foundational models. It includes enterprise AI capabilities built in-house using one or more foundational models (generative or otherwise) complemented with internal enterprise data. The foundational models are black boxes with minimal capacity to risk-assess their makeup, especially if the data that went into those models isn’t well understood. This could lead to bias, copyright infringement, and more.

AI In-house

This class of AI focuses entirely on AI models and LLMs that are trained, tested, and developed internally, where the organization has full visibility into the inputs, the configurations, and the subsequent adjustments made to the models. Again, depending on the data inputs, there can be risks.

In the absence of tools to control the rapid adoption and sprawl of AI use, businesses are turning to existing solutions and processes to discover and monitor AI used throughout their organizations. Tools that can help discover AI include privacy data-mapping solutions, data discovery solutions, and some DLP solutions.
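Whatever tool surfaces it, each discovered use of AI is easier to act on if it is recorded against the four categories above. Below is a minimal, hypothetical Python sketch of such an inventory: the `AIUse` record, its fields, and the example systems are our own illustrative assumptions, not the output format of any data-mapping or DLP product. It tallies findings per category, the kind of summary a monitoring dashboard could start from, and lists systems touching personal data as first candidates for review.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List


class AIUseCategory(Enum):
    """The four types of AI use described above."""
    IN_THE_WILD = "AI in the Wild"
    SUPPLY_CHAIN = "AI in Your Supply Chain (Embedded AI)"
    HYBRID = "Hybrid AI"
    IN_HOUSE = "AI In-house"


@dataclass(frozen=True)
class AIUse:
    """One discovered use of AI (hypothetical inventory record)."""
    system: str                  # tool, vendor, or internal service name
    category: AIUseCategory
    owner: str                   # team accountable for this use
    touches_personal_data: bool


def summarize(inventory: List[AIUse]) -> Dict[str, int]:
    """Count discovered AI uses per category, including empty categories."""
    counts = Counter(use.category for use in inventory)
    return {cat.value: counts.get(cat, 0) for cat in AIUseCategory}


def high_priority(inventory: List[AIUse]) -> List[str]:
    """Systems to review first: those that touch personal data, sorted by name."""
    return sorted(use.system for use in inventory if use.touches_personal_data)
```

Reporting empty categories explicitly (for example, zero Hybrid AI findings) is a deliberate choice: a zero can mean either "not used" or "not yet discovered," which is exactly the question to raise with your executives.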

Step 4: Track & Monitor AI Opportunities and Risks

Once you’ve identified where you’re using AI, you need to actively monitor it to keep track of where and how it’s being used. It’s likely that both the EU (via the AI Act) and California (via the new California Privacy Protection Agency) will require businesses to understand how they use AI, or what California calls “automated decision-making,” to enforce key privacy regulations. In December 2023, California regulators signaled their intent to broadly regulate AI by requiring notices before automated decision-making technologies are used, giving consumers the right to opt out of their data being used by these tools, and giving them the right to access information about businesses’ use of such technology.

We found that companies can leverage a standard Privacy Impact Assessment to create AI Risk Assessments, which will be useful for both the EU AI Act and California’s regulations. DataGrail can help you uncover AI risks in your third-party SaaS. For example, is your data used to train their models? If so, is it anonymized? We can help you figure this out.

It is important to set up regular audits to ensure you are following your Responsible Use Principles & Policies. This could include conducting monthly audits and rolling out training to ensure that employees understand internal AI policies.

Consider setting up a dashboard that details how each of the categories relates to your company’s use of AI. Focus first on the discovery column before talking with your executives about the scope of your exposure. From there, you can design processes for identifying which parts of your system are at risk, the impact those risks could have, and how to mitigate potential consequences.

Tools to Monitor AI Use in the Wild

When it comes to monitoring AI usage, you might already have tools at your disposal. With network security tools such as Zscaler, Cloudflare, or Netskope, you can use logs to see whether employees are connecting to ChatGPT. These network monitoring tools can give you visibility into which AI solutions are being used throughout your business. If you discover a lot of activity, you can use data loss prevention (DLP) technology or other capabilities to see what is flowing into ChatGPT. A DLP solution should identify flows of sensitive data and mitigate unsafe exposures in SaaS apps that use AI. Some solutions even offer coaching to help employees adopt safe practices when interacting with sensitive data.
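As a rough illustration of the log review described above, the sketch below counts requests to generative AI services per user and host. It assumes a hypothetical CSV export with `timestamp,user,host` columns; real exports from Zscaler, Cloudflare, or Netskope each have their own schemas, and the domain watchlist here is illustrative only, so you would maintain your own.

```python
import csv
import io
from collections import Counter

# Illustrative watchlist only; maintain your own list of generative AI services.
GENAI_DOMAINS = {"chat.openai.com", "chatgpt.com", "bard.google.com", "midjourney.com"}


def is_genai_host(host: str) -> bool:
    """True if host is a watched domain or a subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in GENAI_DOMAINS)


def genai_usage(log_csv: str) -> Counter:
    """Count requests per (user, host) to watched generative AI domains.

    Assumes a hypothetical CSV export with the header: timestamp,user,host
    """
    usage: Counter = Counter()
    for row in csv.DictReader(io.StringIO(log_csv)):
        if is_genai_host(row["host"]):
            usage[(row["user"], row["host"].lower())] += 1
    return usage
```

Matching on `"." + domain` rather than a plain suffix keeps look-alike hosts such as `notchatgpt.com` from being counted as ChatGPT traffic.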

In your vendor assessments intended to identify AI risk, quantify risk and ideally point to potential risk mitigation options, questions could include:

- Does the product leverage machine learning models or other AI capabilities (hereafter, “AI services”) that require training data?

- What capabilities are made possible through these AI services?

- Can these capabilities be disabled and what would be the impact on the user experience?

- Does the training data used for these AI services contain personal information?

- If so, do you maintain a valid legal basis (such as consent) to use the personal data to train your AI services?

- Are these AI services dedicated to our organization or are they shared among enterprise customers?

- Do the AI services learn from our enterprise data?
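To compare vendors consistently, the yes/no questions above can be tracked as structured data so answers needing follow-up are flagged the same way every time. This is a hypothetical sketch: the question keys, which answer counts as "risky," and the rule that an unanswered question is always flagged are our own assumptions, and the open-ended question about what capabilities the AI services enable is left out.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Question:
    key: str
    text: str
    risky_answer: bool             # the yes/no answer that signals elevated risk
    only_if: Optional[str] = None  # ask only when this other answer is True


# Yes/no questions adapted from the list above (hypothetical keys).
QUESTIONS = [
    Question("can_disable", "Can these AI capabilities be disabled?", risky_answer=False),
    Question("pi_in_training", "Does the training data contain personal information?", risky_answer=True),
    Question("legal_basis", "Do you maintain a valid legal basis (such as consent) to train on it?",
             risky_answer=False, only_if="pi_in_training"),
    Question("dedicated", "Are the AI services dedicated to our organization?", risky_answer=False),
    Question("learns_from_us", "Do the AI services learn from our enterprise data?", risky_answer=True),
]


def flag_risks(answers: Dict[str, Optional[bool]]) -> List[str]:
    """Return keys of questions whose answers (or missing answers) need follow-up."""
    uses_ai = answers.get("uses_ai")
    if uses_ai is None:
        return ["uses_ai"]  # nothing else can be assessed until this is answered
    if not uses_ai:
        return []           # no AI services, nothing further to flag
    flags = []
    for q in QUESTIONS:
        if q.only_if is not None and answers.get(q.only_if) is not True:
            continue        # question does not apply given earlier answers
        answer = answers.get(q.key)
        if answer is None or answer == q.risky_answer:
            flags.append(q.key)
    return flags
```

Treating a missing answer as a flag is a conservative design choice: a vendor that declines to answer whether its AI learns from your data gets the same follow-up as one that says yes.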

Step 5: Establish the Building Blocks to Control AI

It will take time to discover and monitor where you’re using AI, where data is being uploaded, and how interacting with an AI model may have downstream effects.

It’s still too early to know all the unintended consequences of AI use. However, even in these early stages of AI, there are controls you can put in place to help manage its usage.

As discussed above, California is signaling that “automated decision-making” (that is, AI) is about to be regulated. Organizations will need to honor a person’s right to opt out of their data being used with AI. DataGrail’s Request Manager solution can help orchestrate privacy requests to delete, opt out of, or access data across internal systems and AI/LLMs. Request Manager also provides the audit logs needed to demonstrate that you have taken the required action to honor a person’s privacy request.
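The general pattern behind honoring an opt-out across many systems, fanning the request out and recording evidence for each one, can be sketched in a few lines. This is a generic, hypothetical illustration and not DataGrail’s Request Manager or its API: the `OptOutOrchestrator` class, the handler signature, and the system names are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, Dict, List

# Hypothetical handler: performs the opt-out in one system, returns True on success.
# In practice this might call a vendor API or update an internal table.
OptOutHandler = Callable[[str], bool]


@dataclass(frozen=True)
class AuditEntry:
    """Evidence that an action was attempted for a data subject in one system."""
    subject_id: str
    system: str
    action: str
    succeeded: bool
    detail: str
    at: datetime


@dataclass
class OptOutOrchestrator:
    handlers: Dict[str, OptOutHandler]
    audit_log: List[AuditEntry] = field(default_factory=list)

    def opt_out(self, subject_id: str) -> bool:
        """Send the opt-out to every registered system, logging each outcome.

        Returns True only if every system reported success, so partial
        failures can be retried rather than silently dropped.
        """
        all_ok = True
        for system, handler in self.handlers.items():
            try:
                ok, detail = bool(handler(subject_id)), ""
            except Exception as exc:  # record the failure instead of aborting the fan-out
                ok, detail = False, repr(exc)
            all_ok = all_ok and ok
            self.audit_log.append(AuditEntry(
                subject_id=subject_id,
                system=system,
                action="opt_out",
                succeeded=ok,
                detail=detail,
                at=datetime.now(timezone.utc),
            ))
        return all_ok
```

One failing system, such as a model training data store that times out, does not stop the others from processing the request, and the audit log shows exactly which system still needs attention.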

The real-world implications of AI are still emerging. While you don’t know everything yet, you can build a framework and find strategic technology partners to help you discover, monitor, and control AI, so you can continue innovating in your business.

A Future Where Generative AI & Data Privacy Coexist

We must think differently about AI in relation to data privacy: the future of data is not about how much we collect, but how responsibly it is used and how we can realistically safeguard it, so that we get the best out of AI without violating data privacy tenets. Risks range from training a product on manipulated datasets to get a desired outcome, to combining datasets that were never intended to be combined and thereby identifying a specific person. The cybersecurity risks posed by AI also need to be considered, such as using generative AI for fraudulent activities like creating fake documents or invoices, or even impersonating someone through voice synthesis.
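The dataset-combination risk is a linkage attack: a "de-identified" dataset is joined to a public one on quasi-identifiers such as ZIP code, birth year, and sex. The sketch below illustrates the idea under stated assumptions: the field names, the `diagnosis` attribute, and the toy records in the test are hypothetical. It also computes the "k" of k-anonymity, the size of the smallest group of records sharing the same quasi-identifiers; a k of 1 means at least one person stands alone.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

# Hypothetical quasi-identifiers: not names, but often unique in combination.
QUASI_IDENTIFIERS = ("zip", "birth_year", "sex")
Record = Dict[str, object]


def qi_key(record: Record) -> Tuple[object, ...]:
    """The record's quasi-identifier values, used as a join key."""
    return tuple(record[q] for q in QUASI_IDENTIFIERS)


def smallest_group_size(records: List[Record]) -> int:
    """The 'k' in k-anonymity (records must be non-empty)."""
    return min(Counter(qi_key(r) for r in records).values())


def link(deidentified: List[Record], public: List[Record],
         sensitive: str = "diagnosis") -> Dict[str, object]:
    """Re-identify records whose quasi-identifiers are unique in BOTH datasets."""
    names_by_key = defaultdict(list)
    for person in public:
        names_by_key[qi_key(person)].append(person["name"])
    deid_counts = Counter(qi_key(r) for r in deidentified)

    matches = {}
    for record in deidentified:
        k = qi_key(record)
        names = names_by_key.get(k, [])
        if len(names) == 1 and deid_counts[k] == 1:
            matches[names[0]] = record[sensitive]
    return matches
```

Neither dataset contains both a name and a diagnosis on its own; the exposure only appears once they are combined, which is why checking k before sharing or merging data matters.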

We can expect to see new technologies created to address security and data privacy concerns in an AI world, and AI is already being used for good to build security and privacy products. Consumer Reports recently launched Permission Slip, a mobile app that helps people take back control of the personal data companies hold about them. To scale Permission Slip, the team used language models to read through thousands of privacy policies, answer questions about them, and pull out the source text. All of this was fed into the app, which advises consumers how to control their data.

Looking ahead, imagine consumers getting their own “AI Consent Assistant.” Such a tool would move us from static, one-time consent checkboxes to dynamic, ongoing conversations between consumers and platforms, with the AI Consent Assistant acting as a personal guardian that negotiates on our behalf. Or perhaps AI tools could be developed to help security teams predict privacy breaches before they happen, or to proactively auto-redact sensitive information in real time.
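To make auto-redaction concrete, here is a minimal sketch of a redaction pass that could run on text before it is sent to an external AI tool. It is a deliberately simplified stand-in: it uses two illustrative regular expressions (email addresses and U.S. Social Security numbers) rather than AI, and the patterns and labels are our assumptions. Real DLP products detect many more data types with far more context.

```python
import re
from typing import Dict, Tuple

# Illustrative patterns only; production DLP uses much broader, context-aware detection.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> Tuple[str, Dict[str, int]]:
    """Replace each match with a [LABEL] placeholder and count replacements per label."""
    counts: Dict[str, int] = {}
    for label, pattern in PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts
```

For example, `redact("Contact jane.doe@example.com, SSN 123-45-6789.")` returns `("Contact [EMAIL], SSN [SSN].", {"EMAIL": 1, "SSN": 1})`. Returning the counts as well as the text lets a monitoring tool log how often sensitive data was about to leave, without logging the data itself.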

With this in mind, even companies aspiring to do right by consumers will make mistakes. Ultimately, 2024 will be a year of mishaps. Expect to see some thrown elbows as well, as the tech industry tries to muscle its wishes through and influence potential regulations, and as the government responds based on which direction the dollars flow.
